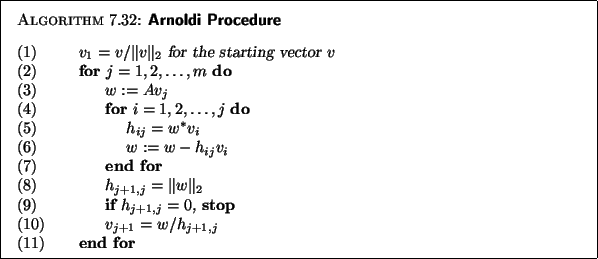

The above procedure

will stop if the vector ![]() computed in line (8) vanishes.

The vectors

computed in line (8) vanishes.

The vectors

![]() form an orthonormal system

by construction and are called Arnoldi vectors.

An easy induction argument shows that this system is a basis of

the Krylov subspace

form an orthonormal system

by construction and are called Arnoldi vectors.

An easy induction argument shows that this system is a basis of

the Krylov subspace ![]() .

.

Next we consider a fundamental relation between quantities

generated by the algorithm.

The following equality is readily derived:

As was noted earlier the algorithm breaks down when the norm of ![]() computed on line (8) vanishes at a certain step

computed on line (8) vanishes at a certain step ![]() . As it turns out,

this happens if and only if

the starting vector

. As it turns out,

this happens if and only if

the starting vector ![]() is a combination of

is a combination of ![]() eigenvectors (i.e.,

the minimal polynomial of

eigenvectors (i.e.,

the minimal polynomial of ![]() is of degree

is of degree ![]() ).

In addition, the subspace

).

In addition, the subspace ![]() is then invariant and

the approximate eigenvalues and eigenvectors are exact

[387].

is then invariant and

the approximate eigenvalues and eigenvectors are exact

[387].

The approximate eigenvalues

![]() provided by the

projection process onto

provided by the

projection process onto ![]() are the eigenvalues of the Hessenberg

matrix

are the eigenvalues of the Hessenberg

matrix ![]() . These are known as Ritz values.

A Ritz approximate eigenvector associated with a Ritz value

. These are known as Ritz values.

A Ritz approximate eigenvector associated with a Ritz value

![]() is defined by

is defined by

![]() , where

, where ![]() is an eigenvector associated with the eigenvalue

is an eigenvector associated with the eigenvalue

![]() . A number of the Ritz eigenvalues,

typically a small

fraction of

. A number of the Ritz eigenvalues,

typically a small

fraction of ![]() , will usually constitute good approximations

for corresponding eigenvalues

, will usually constitute good approximations

for corresponding eigenvalues ![]() of

of ![]() , and the quality of the

approximation will usually improve as

, and the quality of the

approximation will usually improve as ![]() increases.

increases.

The original algorithm consists of increasing ![]() until all desired

eigenvalues of

until all desired

eigenvalues of ![]() are found. For large matrices, this becomes

costly both in terms of computation and storage.

In terms of storage, we need to keep

are found. For large matrices, this becomes

costly both in terms of computation and storage.

In terms of storage, we need to keep ![]() vectors of

length

vectors of

length ![]() plus an

plus an ![]() Hessenberg matrix, a total of approximately

Hessenberg matrix, a total of approximately

![]() . For the arithmetic costs,

we need to multiply

. For the arithmetic costs,

we need to multiply ![]() by

by ![]() , at the cost of

, at the cost of ![]() , where

, where ![]() is number of nonzero elements in

is number of nonzero elements in ![]() ,

and then orthogonalize the result against

,

and then orthogonalize the result against ![]() vectors at the cost of

vectors at the cost of ![]() which increases with the step

number

which increases with the step

number ![]() . Thus an

. Thus an ![]() -dimensional Arnoldi procedure costs

-dimensional Arnoldi procedure costs

![]() in storage and

in storage and

![]() in arithmetic operations.

in arithmetic operations.

Obtaining the residual norm, for a Ritz pair,

as the algorithm progresses is fairly inexpensive.

Let ![]() be an eigenvector of

be an eigenvector of ![]() associated with the

eigenvalue

associated with the

eigenvalue

![]() , and let

, and let ![]() be the Ritz approximate

eigenvector

be the Ritz approximate

eigenvector

![]() .

We have the relation

.

We have the relation