Next: Error Bounds for Computed

Up: Stability and Accuracy Assessments

Previous: Residual Vector.

Contents

Index

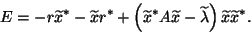

Transfer Residual Error to Backward Error.

There are Hermitian matrices $E$ such that $\widetilde\lambda$ and $\widetilde x$ are an exact eigenvalue and its corresponding eigenvector of $A + E$, i.e.,

$$(A + E)\,\widetilde x = \widetilde\lambda\,\widetilde x .$$

One such  is

is

|

(61) |

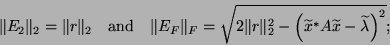
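As a quick sanity check of (61), the NumPy sketch below builds $E$ for a random Hermitian $A$, a random unit vector $\widetilde x$ and an arbitrary real $\widetilde\lambda$, then confirms numerically that $E$ is Hermitian and that $(A+E)\widetilde x = \widetilde\lambda\widetilde x$. The function name `backward_error_matrix` and the test data are illustrative choices, and the sketch assumes the residual convention $r = A\widetilde x - \widetilde\lambda\widetilde x$ with $\|\widetilde x\|_2 = 1$.

```python
import numpy as np

def backward_error_matrix(A, lam, x):
    """Hermitian E from (61), so that (A + E) x = lam x.

    Assumes A is Hermitian, lam is real and x has unit 2-norm.
    """
    r = A @ x - lam * x                   # residual vector
    alpha = np.vdot(x, r).real            # x^* r (real under the assumptions)
    return (-np.outer(r, x.conj()) - np.outer(x, r.conj())
            + alpha * np.outer(x, x.conj()))

rng = np.random.default_rng(0)
n = 5
B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A = (B + B.conj().T) / 2                  # random Hermitian matrix
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)
x /= np.linalg.norm(x)                    # enforce ||x||_2 = 1
lam = 0.3                                 # any real approximate eigenvalue

E = backward_error_matrix(A, lam, x)
print(np.allclose(E, E.conj().T))         # E is Hermitian
print(np.allclose((A + E) @ x, lam * x))  # (lam, x) is exact for A + E
```

Note that the pair need not be accurate: (61) makes it exact for $A + E$ whatever its quality, and only the size of $E$ reflects how good the pair is.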

We are interested in such matrices $E$ with the smallest possible norms. It turns out that the best possible $E = E_2$ for the spectral norm $\|\cdot\|_2$ and the best possible $E = E_F$ for the Frobenius norm $\|\cdot\|_F$ satisfy

$$\|E_2\|_2 = \|r\|_2 , \qquad \|E_F\|_F = \sqrt{2\|r\|_2^2 - |\widetilde x^* r|^2} ; \qquad (62)$$

see, e.g., [256,431].
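The sketch below checks the two values in (62) numerically. It compares $\|E_F\|_F$ for $E$ from (61) with $\sqrt{2\|r\|_2^2 - |\widetilde x^* r|^2}$, and it builds one Hermitian perturbation attaining $\|E\|_2 = \|r\|_2$. That second construction, $E_F + \alpha\, u u^*$ with $\alpha = \widetilde x^* r$ and $u$ the normalized component of $r$ orthogonal to $\widetilde x$, is our own illustrative choice and is not taken from the cited references: on $\operatorname{span}\{\widetilde x, u\}$ it acts as $-\begin{pmatrix}\alpha & \beta\\ \beta & -\alpha\end{pmatrix}$ with $\beta = \|r - \alpha\widetilde x\|_2$, whose eigenvalues are $\pm\|r\|_2$. The helper names are hypothetical, and the check only shows that the values in (62) are attained, not that they are minimal.

```python
import numpy as np

def residual_parts(A, lam, x):
    """Residual r = A x - lam x split along and orthogonal to the unit vector x."""
    r = A @ x - lam * x
    alpha = np.vdot(x, r).real          # x^* r, real for Hermitian A and real lam
    r_perp = r - alpha * x              # part of r orthogonal to x
    return r, alpha, r_perp

def e_frobenius(A, lam, x):
    """E from (61)."""
    r, alpha, _ = residual_parts(A, lam, x)
    return (-np.outer(r, x.conj()) - np.outer(x, r.conj())
            + alpha * np.outer(x, x.conj()))

def e_spectral(A, lam, x):
    """Hypothetical Hermitian E with (A + E) x = lam x and ||E||_2 = ||r||_2:
    E_F + alpha * u u^*, where u = r_perp / ||r_perp||_2."""
    _, alpha, r_perp = residual_parts(A, lam, x)
    E = e_frobenius(A, lam, x)
    beta = np.linalg.norm(r_perp)
    if beta > 0:                        # if beta == 0, E_F = -alpha x x^* already works
        u = r_perp / beta
        E = E + alpha * np.outer(u, u.conj())
    return E

rng = np.random.default_rng(0)
n = 6
B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A = (B + B.conj().T) / 2                # random Hermitian matrix
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)
x /= np.linalg.norm(x)                  # ||x||_2 = 1
lam = 0.3                               # arbitrary real approximate eigenvalue

r, alpha, _ = residual_parts(A, lam, x)
EF, E2 = e_frobenius(A, lam, x), e_spectral(A, lam, x)
nr = np.linalg.norm(r)
print(np.linalg.norm(EF, "fro"), np.sqrt(2 * nr**2 - alpha**2))  # should agree
print(np.linalg.norm(E2, 2), nr)                                  # should agree
```

With this construction $\|E_2\|_F = \sqrt{2}\,\|r\|_2$ whenever $r$ is not parallel to $\widetilde x$, which exceeds $\|E_F\|_F$ when $\widetilde x^* r \ne 0$, so the two optimal perturbations generally differ.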

In fact, $E_F$ is given explicitly by (4.52). So if $\|r\|_2$ is small, the computed $\widetilde\lambda$ and $\widetilde x$ are an exact eigenpair of nearby matrices. Error analysis of this kind is called backward error analysis, and the matrices $E$ are called backward errors.

We say that an algorithm delivering an approximate eigenpair $(\widetilde\lambda, \widetilde x)$ is $\tau$-backward stable for the pair with respect to the norm $\|\cdot\|$ if the pair is an exact eigenpair of $A + E$ with $\|E\| \le \tau$. With this definition in mind, statements can be made about the numerical stability of the algorithm that computes the eigenpair $(\widetilde\lambda, \widetilde x)$.
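By (62), with $\widetilde x$ normalized and $\widetilde\lambda$ real, the smallest Hermitian backward error is $\|r\|_2$ in the spectral norm and $\sqrt{2\|r\|_2^2 - |\widetilde x^* r|^2}$ in the Frobenius norm, so checking $\tau$-backward stability of a single computed pair reduces to comparing one of these numbers with $\tau$. A minimal sketch under those assumptions (Hermitian $A$ and $E$, real $\widetilde\lambda$); the helper names are hypothetical:

```python
import numpy as np

def min_backward_error(A, lam, x, norm="2"):
    """Smallest ||E|| over Hermitian E with (A + E) x = lam x, from (62).

    Assumes A is Hermitian and lam is real; x is first scaled to unit 2-norm.
    """
    x = x / np.linalg.norm(x)
    r = A @ x - lam * x
    nr = np.linalg.norm(r)
    if norm == "2":
        return nr
    if norm == "fro":
        alpha = np.vdot(x, r).real
        return np.sqrt(max(2 * nr**2 - alpha**2, 0.0))  # guard against rounding
    raise ValueError("norm must be '2' or 'fro'")

def is_tau_backward_stable(A, lam, x, tau, norm="2"):
    """True if (lam, x) is an exact eigenpair of some Hermitian A + E with ||E|| <= tau."""
    return min_backward_error(A, lam, x, norm) <= tau

A = np.diag([1.0, 2.0, 3.0])
x = np.array([1.0, 0.0, 0.0])           # exact eigenvector, but lam is off by 0.1
print(min_backward_error(A, 1.1, x), min_backward_error(A, 1.1, x, "fro"))
print(is_tau_backward_stable(A, 1.1, x, 0.2), is_tau_backward_stable(A, 1.1, x, 0.05))
```

In this small example $r = -0.1\,\widetilde x$ is parallel to $\widetilde x$, so both norms give a backward error of about $0.1$.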

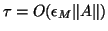

By convention, an algorithm is called backward stable

if

.

By convention, an algorithm is called backward stable

if

, where

, where  is the machine

precision.

is the machine

precision.
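To see the convention in practice, the sketch below computes all eigenpairs of a random real symmetric matrix with `numpy.linalg.eigh` (which calls LAPACK) and reports the largest residual norm relative to $\epsilon_M\|A\|_2$. Since `eigh` returns unit-norm eigenvectors, each residual norm is the optimal 2-norm backward error from (62). Here $\epsilon_M$ is taken as `np.finfo(...).eps` $\approx 2.2\times 10^{-16}$; some texts use half that value (the unit roundoff), which only changes the constant. For a backward stable solver we would expect a ratio of modest size that grows at most slowly with $n$; the exact number depends on the matrix and on the LAPACK build, so none is quoted here.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 50
B = rng.standard_normal((n, n))
A = (B + B.T) / 2                           # random real symmetric matrix

lams, X = np.linalg.eigh(A)                 # eigenvalues (ascending) and unit eigenvectors
eps = np.finfo(A.dtype).eps                 # float64 machine epsilon, about 2.2e-16
normA = np.linalg.norm(A, 2)                # spectral norm of A

# Column j of A @ X - X * lams is A x_j - lam_j x_j; with ||x_j||_2 = 1 its norm
# is the optimal 2-norm backward error of the j-th pair by (62).
res = np.linalg.norm(A @ X - X * lams, axis=0)
ratio = res.max() / (eps * normA)
print(f"max_j ||r_j||_2 / (eps * ||A||_2) = {ratio:.1f}")
```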


Susan Blackford

2000-11-20
