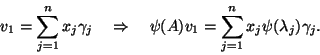

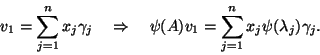

It is worth noting that if $m = n$, then $f_m = 0$ and this iteration is precisely the same as the implicitly shifted QR iteration. Even for $m < n$, the first $k$ columns of $V_m$ and the leading $k \times k$ tridiagonal submatrix of $T_m$ are mathematically equivalent to the matrices that would appear in the full implicitly shifted QR iteration using the same shifts $\mu_j$. In this sense, the IRLM may be viewed as a truncation of the implicitly shifted QR iteration. The fundamental difference is that the standard implicitly shifted QR iteration selects shifts to drive subdiagonal elements of $T_n$ to zero from the bottom up, while the shift selection in the implicitly restarted Lanczos method is made to drive subdiagonal elements of $T_m$ to zero from the top down. Of course, convergence of the implicit restart scheme here is like a "shifted power" method, while the full implicitly shifted QR iteration is like an "inverse iteration" method.
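The truncation view can be checked numerically with a minimal NumPy sketch. It builds an $m$-step Lanczos factorization $AV_m = V_m T_m + f_m e_m^T$ of a random real symmetric matrix, applies $p = m - k$ shifted QR steps to $T_m$, keeps the leading $k$ columns, and verifies that the result is again a $k$-step Lanczos factorization. It also checks that the new starting vector is proportional to $\psi(A)v_1$ with $\psi(\lambda) = \prod_j (\lambda - \mu_j)$ (the "shifted power" behavior) and that $f_m$ vanishes when $m = n$. The helper names `lanczos` and `implicit_restart`, the test matrix, and the shift values are illustrative assumptions. Full reorthogonalization and dense `np.linalg.qr` calls are used for clarity rather than efficiency; a practical implementation would apply each shift implicitly with Givens rotations on the tridiagonal matrix.

```python
import numpy as np


def lanczos(A, v0, m):
    """m-step Lanczos factorization A V = V T + f e_m^T (hypothetical helper).

    Uses two Gram-Schmidt passes per step (full reorthogonalization)
    so that V stays orthonormal in this small example.
    """
    n = A.shape[0]
    V = np.zeros((n, m))
    alpha = np.zeros(m)
    beta = np.zeros(m - 1)
    V[:, 0] = v0 / np.linalg.norm(v0)
    for j in range(m):
        w = A @ V[:, j]
        h = V[:, : j + 1].T @ w
        w = w - V[:, : j + 1] @ h
        c = V[:, : j + 1].T @ w  # second orthogonalization pass
        w = w - V[:, : j + 1] @ c
        alpha[j] = h[j] + c[j]
        if j < m - 1:
            beta[j] = np.linalg.norm(w)
            V[:, j + 1] = w / beta[j]
    T = np.diag(alpha) + np.diag(beta, 1) + np.diag(beta, -1)
    return V, T, w  # the final w is the residual f_m


def implicit_restart(V, T, f, shifts, k):
    """Apply shifted QR steps to T, then truncate to a k-step factorization."""
    m = T.shape[0]
    Q = np.eye(m)
    for mu in shifts:
        Qj, _ = np.linalg.qr(T - mu * np.eye(m))
        T = Qj.T @ T @ Qj
        Q = Q @ Qj
    Vp = V @ Q
    # f_k^+ = beta_k^+ v_{k+1}^+ + sigma_k f_m, where sigma_k = Q[m-1, k-1]
    fk = T[k, k - 1] * Vp[:, k] + Q[m - 1, k - 1] * f
    return Vp[:, :k], T[:k, :k], fk


rng = np.random.default_rng(0)
n, k, p = 40, 4, 6
m = k + p
B = rng.standard_normal((n, n))
A = (B + B.T) / 2  # real symmetric test matrix
v0 = rng.standard_normal(n)

V, T, f = lanczos(A, v0, m)
shifts = [-1.0, 0.5, 2.0, -2.5, 1.5, 0.0]  # arbitrary illustrative shifts
Vk, Tk, fk = implicit_restart(V, T, f, shifts, k)

# Residual of the truncated relation A V_k = V_k T_k + f_k e_k^T
ek = np.zeros(k)
ek[-1] = 1.0
res = np.linalg.norm(A @ Vk - Vk @ Tk - np.outer(fk, ek))
print(f"k-step residual after restart: {res:.1e}")

# The restarted starting vector should be parallel to psi(A) v1
w = V[:, 0].copy()
for mu in shifts:
    w = A @ w - mu * w
cosine = abs(w @ Vk[:, 0]) / np.linalg.norm(w)
print(f"|cos(angle(v1+, psi(A) v1))| = {cosine:.12f}")

# With m = n the Krylov space is all of R^n, so the residual vanishes
_, _, f_full = lanczos(A, v0, n)
print(f"||f_n|| for m = n: {np.linalg.norm(f_full):.1e}")
```

The identity $\psi(A)v_1 = V_m \psi(T_m) e_1$ used in the second check holds because $\psi$ has degree $p < m$, so the residual term $f_m e_m^T$ never enters.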

Thus, the exact shift strategy can be viewed both as a means to damp unwanted components in the starting vector and as directly forcing the starting vector to be a linear combination of wanted eigenvectors.
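The exact-shift claim can be checked on the projected matrix alone. In this sketch a random unreduced symmetric tridiagonal matrix stands in for $T_m$ (an assumption; in practice it comes from the Lanczos process), its $p$ smallest Ritz values are treated as unwanted and used as shifts, and its $k$ largest are treated as wanted. Since the restarted starting vector is $v_1^+ = V_m q_1$ with $q_1 = Q e_1$, and the Ritz vectors are $x_i = V_m y_i$, showing that $q_1$ has no component along the unwanted eigenvectors $y_i$ of $T_m$ shows that $v_1^+$ lies in the span of the wanted Ritz vectors. The sketch also prints $|\beta_k^+|$, which is zero in exact arithmetic because the unwanted Ritz values decouple into the trailing block; in floating point this deflation can be less reliable, so it is only printed, not tested.

```python
import numpy as np

rng = np.random.default_rng(1)
m, k = 10, 4
p = m - k
alpha = rng.standard_normal(m)
beta = np.abs(rng.standard_normal(m - 1)) + 0.1  # nonzero off-diagonals: unreduced
T = np.diag(alpha) + np.diag(beta, 1) + np.diag(beta, -1)  # stand-in for T_m

# Ritz values in ascending order and the eigenvectors y_i of T_m
theta, Y = np.linalg.eigh(T)
unwanted, wanted = Y[:, :p], Y[:, p:]  # wanted: the k largest Ritz values
shifts = theta[:p]                      # exact shifts = unwanted Ritz values

# Apply the p shifted QR steps and accumulate Q
Q = np.eye(m)
Tp = T.copy()
for mu in shifts:
    Qj, _ = np.linalg.qr(Tp - mu * np.eye(m))
    Tp = Qj.T @ Tp @ Qj
    Q = Q @ Qj

q1 = Q[:, 0]  # coefficients of v1+ in the Lanczos basis V_m
print("norm of component along unwanted y_i:", np.linalg.norm(unwanted.T @ q1))
print("norm of component along wanted y_i:  ", np.linalg.norm(wanted.T @ q1))
print("|beta_k^+| (zero in exact arithmetic):", abs(Tp[k, k - 1]))
```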

See [419] for information on the convergence of the IRLM and [22,421] for other possible shift strategies for Hermitian $A$. The reader is referred to [293,334] for studies comparing implicit restart with other schemes.