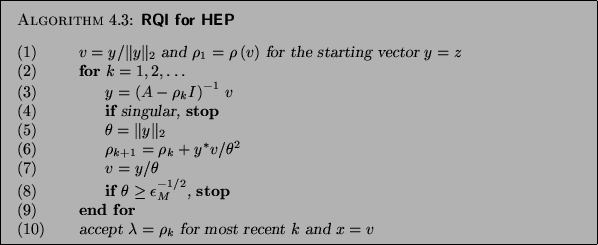

A natural extension of inverse iteration is to vary the shift at each step. It turns out that the best shift which can be derived from an eigenvector approximation $x$ is the Rayleigh quotient of $x$, namely,

$$\rho(x) = \frac{x^* A x}{x^* x}.$$
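As a small illustration of the quotient, the sketch below evaluates it for a real symmetric matrix stored as a list of rows. The function name `rayleigh_quotient` and the 2-by-2 test matrix are illustrative assumptions, not taken from the text; for a complex Hermitian matrix the transposes would become conjugate transposes.

```python
def rayleigh_quotient(A, x):
    """Return x^T A x / (x^T x) for a real symmetric A given as a list of rows.

    Hypothetical helper for illustration; x must be nonzero.
    """
    Ax = [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]
    numerator = sum(x_i * ax_i for x_i, ax_i in zip(x, Ax))
    denominator = sum(x_i * x_i for x_i in x)
    return numerator / denominator


# Illustrative matrix with eigenvalues 1 and 3; (1, 1) is an eigenvector for 3.
A = [[2.0, 1.0],
     [1.0, 2.0]]

exact = rayleigh_quotient(A, [1.0, 1.0])   # quotient at an exact eigenvector
rough = rayleigh_quotient(A, [1.0, 0.9])   # quotient at a perturbed eigenvector
print(exact, rough)
```

For a symmetric matrix the error in the quotient is of second order in the error of the vector, which is one way to see why it makes a good shift: here a 10% perturbation in one component moves the quotient away from the eigenvalue 3 by well under 1%.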

In general, Rayleigh quotient iteration (RQI) will need fewer iterations to find an eigenvalue than inverse iteration with a constant shift; it ultimately has cubic convergence, while inverse iteration converges linearly. However, it is not obvious how to choose the starting vector $x_0$ to make RQI converge to any particular eigenvalue/eigenvector pair. For example, RQI can converge to an eigenvalue which is not the closest to the starting Rayleigh quotient $\rho(x_0)$ and to an eigenvector which is not closest to the starting vector $x_0$. Furthermore, there is the tiny but nasty possibility that it may not converge to an eigenvalue/eigenvector pair at all. RQI is more expensive than inverse iteration, requiring a factorization of $A - \rho_k I$ at every iteration, and this matrix will be singular when the shift $\rho_k$ hits an eigenvalue. Hence RQI is practical only if such factorizations can be obtained cheaply at every iteration. See Parlett [353] for more details.
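The iteration described above can be sketched as follows for a small dense real symmetric matrix. This is a minimal sketch under stated assumptions, not a production implementation: the names `solve` and `rayleigh_quotient_iteration`, the tolerance, and the test matrix are all hypothetical, and the dense Gaussian elimination stands in for whatever cheap factorization is available in practice. Replacing an exactly zero pivot with a tiny number is an assumption of this sketch, chosen so that a shift landing on an eigenvalue does not abort the solve.

```python
import math
import sys


def solve(M, b):
    """Solve M y = b by Gaussian elimination with partial pivoting.

    Assumption of this sketch: an exactly zero pivot is replaced by a tiny
    value so that a (numerically) singular shifted matrix still yields a
    usable, very large, solution vector.
    """
    n = len(b)
    aug = [list(row) + [b_i] for row, b_i in zip(M, b)]
    scale = max(abs(v) for row in M for v in row) or 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(aug[i][k]))
        aug[k], aug[p] = aug[p], aug[k]
        if aug[k][k] == 0.0:
            aug[k][k] = sys.float_info.epsilon * scale
        for i in range(k + 1, n):
            f = aug[i][k] / aug[k][k]
            for j in range(k, n + 1):
                aug[i][j] -= f * aug[k][j]
    y = [0.0] * n
    for i in reversed(range(n)):
        s = aug[i][n] - sum(aug[i][j] * y[j] for j in range(i + 1, n))
        y[i] = s / aug[i][i]
    return y


def normalize(x):
    """Scale x to unit 2-norm, dividing by the largest entry first to avoid overflow."""
    m = max(abs(v) for v in x)
    x = [v / m for v in x]
    nrm = math.sqrt(sum(v * v for v in x))
    return [v / nrm for v in x]


def rayleigh_quotient_iteration(A, x0, tol=1e-12, max_iter=20):
    """Return (rho, x, history) where history holds the residual norms ||A x - rho x||."""
    n = len(A)
    x = normalize(x0)
    history = []
    for _ in range(max_iter):
        Ax = [sum(a * v for a, v in zip(row, x)) for row in A]
        rho = sum(xi * axi for xi, axi in zip(x, Ax))   # x has unit norm
        res = math.sqrt(sum((axi - rho * xi) ** 2 for xi, axi in zip(x, Ax)))
        history.append(res)
        if res <= tol:
            break
        # A new shifted matrix, and hence a new factorization, at every step.
        shifted = [[A[i][j] - (rho if i == j else 0.0) for j in range(n)]
                   for i in range(n)]
        x = normalize(solve(shifted, x))
    return rho, x, history


# Illustrative symmetric matrix with eigenvalues 3 - sqrt(3), 3, and 3 + sqrt(3).
A = [[2.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 4.0]]
rho, x, history = rayleigh_quotient_iteration(A, [1.0, 1.0, 1.0])
print(rho)
print(history)
```

Printing `history` shows how quickly the residual collapses once the iteration is close to an eigenpair. Note that the shifted matrix is rebuilt and eliminated from scratch in every pass through the loop, which is exactly the per-iteration cost discussed above; with a constant shift the factorization could be computed once and reused.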