One reason that block methods are of interest is that they are

potentially more suitable for vector computers and parallel

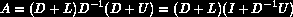

architectures. Consider the block factorization

where  is the block diagonal matrix of pivot blocks.

is the block diagonal matrix of pivot blocks.

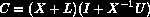

Making the transition to an incomplete factorization we can

replace the diagonal of pivots  by either the diagonal of

incomplete factorization pivots

by either the diagonal of

incomplete factorization pivots  , or the inverse of

, or the inverse of

, the diagonal of approximations to the inverses of

the pivots. In the first case we find for the incomplete factorization

, the diagonal of approximations to the inverses of

the pivots. In the first case we find for the incomplete factorization

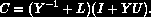

and in the second case

We see that for factorizations of the first type (which covers all methods in Concus, Golub and Meurant [55]) solving a system means solving smaller systems with the $X_i$ matrices. For the second type (which was discussed by Meurant [152], Axelsson and Polman [20] and Axelsson and Eijkhout [15]) solving a system with the preconditioner $M$ entails multiplying by the $Y_i$ blocks. Therefore, the second type has a much higher potential for vectorizability.
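The difference between the two types can be illustrated with a minimal NumPy sketch. It assumes a block tridiagonal structure, writes the two preconditioners as $(X+L)(I+X^{-1}U)$ and $(Y^{-1}+L)(I+YU)$, and stores the blocks in plain Python lists. The function names `solve_type1`, `solve_type2` and `assemble` are hypothetical, and $Y_i$ is set to the exact inverse of $X_i$ only so that the two results can be compared; in practice $Y_i$ would be a cheaper approximation.

```python
import numpy as np

def solve_type1(X, L, U, r):
    """Solve (X + L)(I + X^{-1} U) z = r; every step solves with a block X[i]."""
    n = len(X)
    y = [None] * n
    for i in range(n):  # forward sweep: X_i y_i = r_i - L_{i,i-1} y_{i-1}
        rhs = r[i] if i == 0 else r[i] - L[i - 1] @ y[i - 1]
        y[i] = np.linalg.solve(X[i], rhs)
    z = [None] * n
    z[n - 1] = y[n - 1]
    for i in range(n - 2, -1, -1):  # backward sweep: z_i = y_i - X_i^{-1} U_{i,i+1} z_{i+1}
        z[i] = y[i] - np.linalg.solve(X[i], U[i] @ z[i + 1])
    return z

def solve_type2(Y, L, U, r):
    """Solve (Y^{-1} + L)(I + Y U) z = r; every step multiplies by a block Y[i]."""
    n = len(Y)
    y = [None] * n
    for i in range(n):  # forward sweep: y_i = Y_i (r_i - L_{i,i-1} y_{i-1})
        rhs = r[i] if i == 0 else r[i] - L[i - 1] @ y[i - 1]
        y[i] = Y[i] @ rhs
    z = [None] * n
    z[n - 1] = y[n - 1]
    for i in range(n - 2, -1, -1):  # backward sweep: z_i = y_i - Y_i U_{i,i+1} z_{i+1}
        z[i] = y[i] - Y[i] @ (U[i] @ z[i + 1])
    return z

def assemble(D, L, U):
    """Build dense block diagonal, strictly lower and strictly upper matrices (for checking only)."""
    n, b = len(D), D[0].shape[0]
    Dm = np.zeros((n * b, n * b))
    Lm = np.zeros_like(Dm)
    Um = np.zeros_like(Dm)
    for i in range(n):
        Dm[i * b:(i + 1) * b, i * b:(i + 1) * b] = D[i]
    for i in range(n - 1):
        Lm[(i + 1) * b:(i + 2) * b, i * b:(i + 1) * b] = L[i]
        Um[i * b:(i + 1) * b, (i + 1) * b:(i + 2) * b] = U[i]
    return Dm, Lm, Um

# Small illustrative problem: 4 block rows with 3x3 blocks (sizes are arbitrary).
rng = np.random.default_rng(0)
n, b = 4, 3
X = [4.0 * np.eye(b) + 0.5 * rng.standard_normal((b, b)) for _ in range(n)]
L = [rng.standard_normal((b, b)) for _ in range(n - 1)]
U = [rng.standard_normal((b, b)) for _ in range(n - 1)]
r = [rng.standard_normal(b) for _ in range(n)]

# Assumption for comparison only: Y_i is the exact inverse of X_i.
Y = [np.linalg.inv(Xi) for Xi in X]

z1 = np.concatenate(solve_type1(X, L, U, r))
z2 = np.concatenate(solve_type2(Y, L, U, r))

# Dense reference preconditioner M = (X + L)(I + X^{-1} U).
Xm, Lm, Um = assemble(X, L, U)
M = (Xm + Lm) @ (np.eye(n * b) + np.linalg.solve(Xm, Um))
```

In `solve_type1` each sweep calls a dense solver once per block, whereas `solve_type2` uses only matrix-vector products, which is the operation the text identifies as better suited to vector hardware.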