Previous: Overview of the BLAS

Up: Contents

Next: Notation

Previous Page: Overview of the BLAS

Next Page: Notation

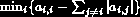

- Adaptive methods

- Iterative methods that collect information

about the coefficient matrix during the iteration process, and use

this to speed up convergence.

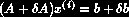

- Backward error

- The size of perturbations

of the

coefficient matrix and

of the

coefficient matrix and  of the right hand side of a linear

system

of the right hand side of a linear

system  , such that the computed iterate

, such that the computed iterate  is the

solution of

is the

solution of  .

.

- Band matrix

- A matrix

for which there are nonnegative

constants

for which there are nonnegative

constants  ,

,  such that

such that  if

if  or

or  . The

two constants

. The

two constants  ,

,  are called the left and right halfbandwidth

respectively.

are called the left and right halfbandwidth

respectively.

- Black box

- A piece of software that can be used without

knowledge of its inner workings; the user supplies the input, and

the output is assumed to be correct.

- BLAS

- Basic Linear Algebra Subprograms; a set of commonly

occurring vector and matrix operations for dense linear algebra.

Optimized (usually assembly coded) implementations of the BLAS exist

for various computers; these will give a higher performance than

implementation in high level programming languages.

- Block factorization

- See: Block matrix operations.

- Block matrix operations

- Matrix operations expressed in terms of

submatrices.

- Breakdown

- The occurrence of a zero divisor in an iterative

method.

- Choleski decomposition

- Expressing a symmetric matrix

as a

product of a lower triangular matrix

as a

product of a lower triangular matrix  and its transpose

and its transpose  ,

that is,

,

that is,  .

.

- Condition number

- See: Spectral condition number.

- Convergence

- The fact whether or not, or the rate at which, an

iterative method approaches the solution of a linear system.

Convergence can be

- Linear

: some measure of the distance

to the solution decreases by a constant factor in each iteration.

: some measure of the distance

to the solution decreases by a constant factor in each iteration.

- Superlinear

: the measure of the

error decreases by a growing factor.

: the measure of the

error decreases by a growing factor.

- Smooth

: the measure of the error

decreases in all or most iterations, though not necessarily by the

same factor.

: the measure of the error

decreases in all or most iterations, though not necessarily by the

same factor.

- Irregular

: the measure of the error

decreases in some iterations and increases in others. This

observation unfortunately does not imply anything about the ultimate

convergence of the method.

: the measure of the error

decreases in some iterations and increases in others. This

observation unfortunately does not imply anything about the ultimate

convergence of the method.

- Stalled

: the measure of the error

stays more or less constant during a number of iterations. As above,

this does not imply anything about the ultimate convergence of the

method.

: the measure of the error

stays more or less constant during a number of iterations. As above,

this does not imply anything about the ultimate convergence of the

method.

- Dense matrix

- Matrix for which the number of zero elements is

too small to warrant specialized algorithms to exploit these zeros.

- Diagonally dominant matrix

- See: Matrix properties

- Direct method

- An algorithm that produces the solution to a

system of linear equations in a number of operations that is

determined a priori by the size of the system. In exact arithmetic,

a direct method yields the true solution to the system. See:

Iterative method.

- Distributed memory

- See: Parallel computer.

- Divergence

- An iterative method is said to diverge if it does

not converge in a reasonable number of iterations, or if some

measure of the error grows unacceptably. However, growth of the

error as such is no sign of divergence: a method with irregular

convergence behavior may ultimately converge, even though the error

grows during some iterations.

- Domain decomposition method

- Solution method for linear systems

based on a partitioning of the physical domain of the differential

equation. Domain decomposition methods typically involve (repeated)

independent system solution on the subdomains, and some way of

combining data from the subdomains on the separator part of the

domain.

- Field of values

- Given a matrix

, the field of values is the

set

, the field of values is the

set  . For symmetric matrices this is the range

. For symmetric matrices this is the range

.

.

- Fill

- A position that is zero in the original matrix

but not

in an exact factorization of

but not

in an exact factorization of  . In an incomplete factorization,

some fill elements are discarded.

. In an incomplete factorization,

some fill elements are discarded.

- Forward error

- The difference between a computed iterate and the

true solution of a linear system, measured in some vector norm.

- Halfbandwidth

- See: Band matrix.

- Ill-conditioned system

- A linear system for which the

coefficient matrix has a large condition number. Since in many

applications the condition number is proportional to (some power of)

the number of unknowns, this should be understood as the constant of

proportionality being large.

- Incomplete factorization

- A factorization obtained by discarding

certain elements during the factorization process (`modified' and

`relaxed' incomplete factorization compensate in some way for

discarded elements). Thus an incomplete

factorization of a

matrix

factorization of a

matrix  will in general satisfy

will in general satisfy  ; however, one hopes

that the factorization

; however, one hopes

that the factorization  will be close enough to

will be close enough to  to function

as a preconditioner in an iterative method.

to function

as a preconditioner in an iterative method.

- Iterate

- Approximation to the solution of a linear system in any

iteration of an iterative method.

- Iterative method

- An algorithm that produces a sequence of

approximations to the solution of a linear system of equations; the

length of the sequence is not given a priori by the size of the

system. Usually, the longer one iterates, the closer one is able to

get to the true solution. See: Direct method.

- Krylov sequence

- For a given matrix

and vector

and vector  , the

sequence of vectors

, the

sequence of vectors  , or a finite initial part of

this sequence.

, or a finite initial part of

this sequence.

- Krylov subspace

- The subspace spanned by a Krylov sequence.

- LAPACK

- A mathematical library of linear algebra routine for

dense systems solution and eigenvalue calculations.

- Lower triangular matrix

- Matrix

for which

for which  if

if  .

.

factorization

factorization

- A way of writing a matrix

as a product

of a lower triangular matrix

as a product

of a lower triangular matrix  and a unitary matrix

and a unitary matrix  , that is,

, that is,

.

.

factorization /

factorization /  decomposition

decomposition

- Expressing a matrix

as a product of a lower triangular matrix

as a product of a lower triangular matrix  and an upper

triangular matrix

and an upper

triangular matrix  , that is,

, that is,  .

.

-Matrix

-Matrix

- See: Matrix properties.

- Matrix norms

- See: Norms.

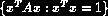

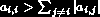

- Matrix properties

- We call a square matrix

- Symmetric

- if

for all

for all  ,

,  .

.

- Positive definite

- if it satisfies

for all nonzero

vectors

for all nonzero

vectors  .

.

- Diagonally dominant

- if

; the

excess amount

; the

excess amount  is called

the diagonal dominance of the matrix.

is called

the diagonal dominance of the matrix.

- An

-matrix

-matrix

- if

for

for  , and it is

nonsingular with

, and it is

nonsingular with  for all

for all  ,

,  .

.

- Message passing

- See: Parallel computer.

- Multigrid method

- Solution method for linear systems based on

restricting and extrapolating solutions between a series of nested

grids.

- Modified incomplete factorization

- See: Incomplete

factorization.

- Natural ordering

- See: Ordering of unknowns.

- Nonstationary iterative method

- Iterative method that has

iteration-dependent coefficients.

- Normal equations

- For a nonsymmetric or indefinite (but

nonsingular) system of equations

, either of the related

symmetric systems (

, either of the related

symmetric systems ( ) and (

) and ( ;

;  ). For complex

). For complex  ,

,  is replaced with

is replaced with  in the above

expressions.

in the above

expressions.

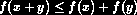

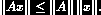

- Norms

- A function

is called a vector norm

if

is called a vector norm

if

for all

for all  , and

, and  only if

only if  .

.-

for all

for all  ,

,  .

.

for all

for all  ,

,  .

.

The same properties hold for matrix norms. A matrix norm and a

vector norm (both denoted  ) are called a mutually

consistent pair if for all matrices

) are called a mutually

consistent pair if for all matrices  and vectors

and vectors

- Ordering of unknowns

- For linear systems derived from a partial

differential equation, each unknown corresponds to a node in the

discretization mesh. Different orderings of the unknowns correspond

to permutations of the coefficient matrix. The convergence speed of

iterative methods may depend on the ordering used, and often the

parallel efficiency of a method on a parallel computer is strongly

dependent on the ordering used. Some common orderings for

rectangular domains are:

- The natural ordering; this is the consecutive numbering by

rows and columns.

- The red/black ordering; this is the numbering where all nodes

with coordinates

for which

for which  is odd are numbered

before those for which

is odd are numbered

before those for which  is even.

is even.

- The ordering by diagonals; this is the ordering where nodes

are grouped in levels for which

is constant. All nodes in

one level are numbered before the nodes in the next level.

is constant. All nodes in

one level are numbered before the nodes in the next level.

For matrices from problems on less regular domains, some common

orderings are:

- The Cuthill-McKee ordering; this starts from one point, then

numbers its neighbors, and continues numbering points that are

neighbors of already numbered points. The Reverse Cuthill-McKee

ordering then reverses the numbering; this may reduce the amount

of fill in a factorization of the matrix.

- The Minimum Degree ordering; this orders the matrix rows by

increasing numbers of nonzeros.

- Parallel computer

- Computer with multiple independent processing

units. If the processors have immediate access to the same memory,

the memory is said to be shared; if processors have private memory

that is not immediately visible to other processors, the memory is

said to be distributed. In that case, processors communicate by

message-passing.

- Pipelining

- See: Vector computer.

- Positive definite matrix

- See: Matrix properties.

- Preconditioner

- An auxiliary matrix in an iterative method that

approximates in some sense the coefficient matrix or its inverse.

The preconditioner, or preconditioning matrix, is applied in every

step of the iterative method.

- Red/black ordering

- See: Ordering of unknowns.

- Reduced system

- Linear system obtained by eliminating certain

variables from another linear system. Although the number of

variables is smaller than for the original system, the matrix of a

reduced system generally has more nonzero entries. If the original

matrix was symmetric and positive definite, then the reduced system

has a smaller condition number.

- Relaxed incomplete factorization

- See: Incomplete factorization.

- Residual

- If an iterative method is employed to solve for

in

a linear system

in

a linear system  , then the residual corresponding to a

vector

, then the residual corresponding to a

vector  is

is  .

.

- Search direction

- Vector that is used to update an iterate.

- Shared memory

- See: Parallel computer.

- Simultaneous displacements, method of

- Jacobi method.

- Sparse matrix

- Matrix for which the number of zero elements is

large enough that algorithms avoiding operations on zero elements

pay off. Matrices derived from partial differential equations

typically have a number of nonzero elements that is proportional to

the matrix size, while the total number of matrix elements is the

square of the matrix size.

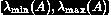

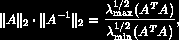

- Spectral condition number

- The product

where  and

and  denote the largest and

smallest eigenvalues, respectively. For linear systems derived from

partial differential equations in 2D, the condition number is

proportional to the number of unknowns.

denote the largest and

smallest eigenvalues, respectively. For linear systems derived from

partial differential equations in 2D, the condition number is

proportional to the number of unknowns.

- Spectral radius

- The spectral radius of a matrix

is

is

.

.

- Spectrum

- The set of all eigenvalues of a matrix.

- Stationary iterative method

- Iterative method that performs in

each iteration the same operations on the current iteration vectors.

- Stopping criterion

- Since an iterative method computes

successive approximations to the solution of a linear system, a

practical test is needed to determine when to stop the iteration.

Ideally this test would measure the distance of the last iterate to

the true solution, but this is not possible. Instead, various other

metrics are used, typically involving the residual.

- Storage scheme

- The way elements of a matrix are stored in the

memory of a computer. For dense matrices, this can be the decision

to store rows or columns consecutively. For sparse matrices, common

storage schemes avoid storing zero elements; as a result they

involve indices, stored as integer data, that indicate where the

stored elements fit into the global matrix.

- Successive displacements, method of

- Gauss-Seidel method.

- Symmetric matrix

- See: Matrix properties.

- Template

- Description of an algorithm, abstracting away from

implementational details.

- Tune

- Adapt software for a specific application and computing

environment in order to obtain better performance in that case only.

itemize

- Upper triangular matrix

- Matrix

for which

for which  if

if  .

.

- Vector computer

- Computer that is able to process consecutive

identical operations (typically additions or multiplications)

several times faster than intermixed operations of different types.

Processing identical operations this way is called `pipelining' the

operations.

- Vector norms

- See: Norms.

Previous: Overview of the BLAS

Up: Contents:

Next: Notation

Previous Page: Overview of the BLAS

Next Page: Notation

and

denote the largest and

smallest eigenvalues, respectively. For linear systems derived from

partial differential equations in 2D, the condition number is

proportional to the number of unknowns.